Description

Group project for a computer vision course taken at Sapienza. The task was to pre-process and extract features from the FaceForensics++ dataset, use these features to fine-tune a transformer model for DeepFake detection, and then investigate some model compression techniques. I worked on extracting depth estimates from the pre-processed video frames and fine-tuned some transformer models. Other group members worked on the pre-processing, extracting optical flows, and model compression using quantisation and distillation.

Inspiration

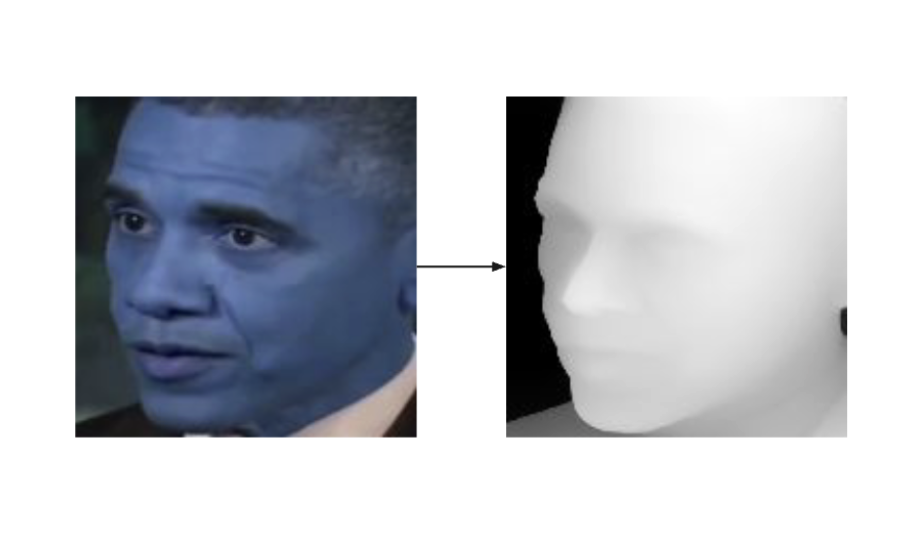

The pipeline was based on an existing depth-based method which used a pre-trained CNN to estimate depths and used these depths to fine-tune another CNN for real/fake classification.

Process

I used Depth Anything V2 to estimate the depths of each pre-processed video frame, normalised them, and saved them as grayscale images. This was done to prevent introducing artefacts with colour maps.

The transformer models investigated (DINOv2, ViT, Swin) all take RGB images as input so the grayscale channel was replicated three times to generate pseudo-RGB images. Computational resources limited the amount of data that could be used for training and the number of epochs that could be run, hence there were no noticeable differences between the three transformer models during training.

Learnings

Using DINOv2, the various depth-based models (uncompressed and compressed) were able to achieve validation accuracies of 60-70% when trained on a limited subset of the FaceForensics++ dataset. This was better than the flow-based model but both models showed signs of overfitting.

Future work includes training on more data to combat overfitting and combining the optical flow and depth features to hopefully lead to more robust classification.